Building a 3D Mind Palace to Understand Vector Embeddings

I'm the kind of learner who has to see something to really grasp it. If I can spin it around, poke at it, and give it a bit of colour-coding, I relax, and things start to click. This weekend, I hacked together a little app to get my head around vector embeddings. I've recently built Retrieval-Augmented Generation (RAG) into Alvia, the voice interview assistant I've been working on, and I felt the need to deepen my intuitive understanding of what's going on underneath. Rather than more abstract concepts, I wanted a practical build to ground them.

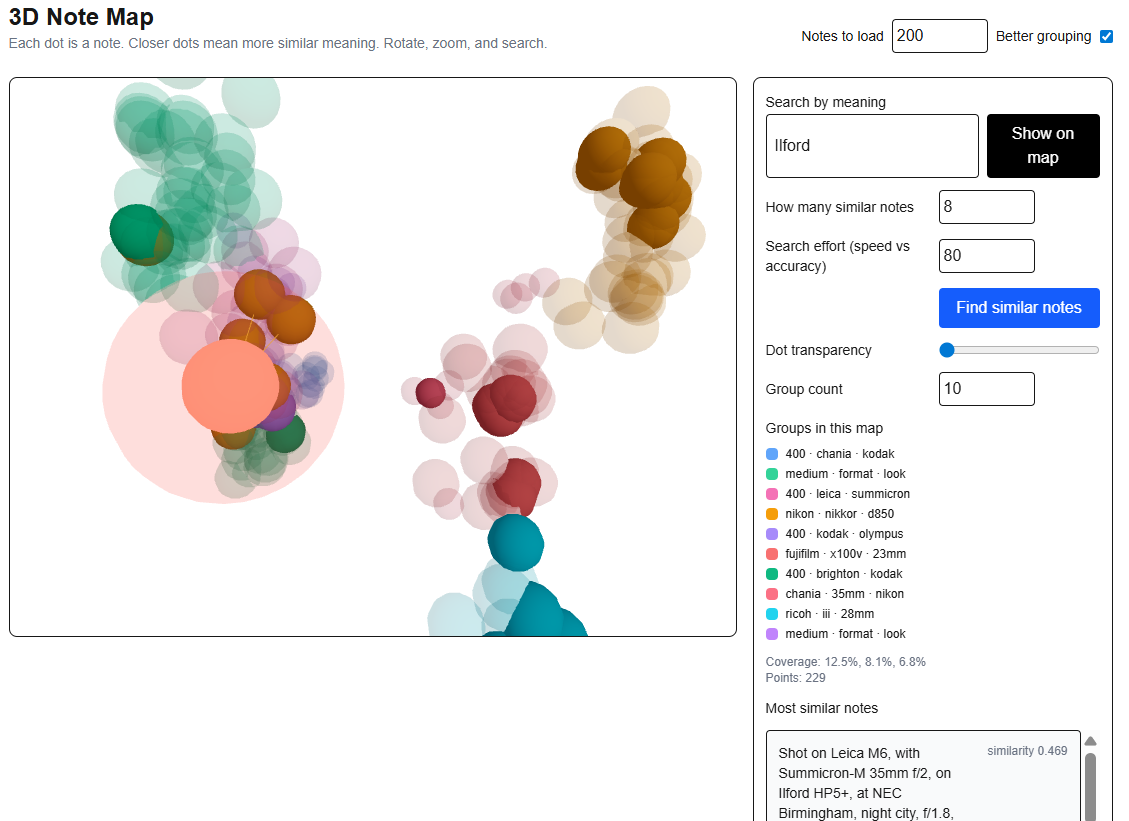

The app, which I built in a couple of hours vibing with GPT-5 in Cursor, gives you a proper 3D map to fly through. It turns complex relationships between data points into neighbourhoods of ping-pong balls.

I loaded it with a nerdy dataset: about a thousand short notes describing photographs I've taken. They cover everything from the equipment used (cameras, lenses, film stocks) to locations, subjects, and right down to exposure settings. Each note becomes a little ball in space. Balls close together mean the notes are similar, which makes the whole thing intuitive and human. Check out the screenshot: a tiny galaxy of camera gear.

What did I build?

It's just a small Next.js app, but it does a few clever things:

You add short notes, the app turns them into embeddings, then saves them in Supabase using pgvector.

You can search by meaning in plain English. The app finds related notes and highlights how close they sit to your query.

The notes live in a 3D scatter plot. You rotate, zoom, click on dots, and actually read the notes.

Your search query appears as a red dot, linked by amber lines to the closest matching notes.

Clusters get labelled with quick keywords. You decide how many clusters you want and can tweak transparency to keep things clear.

There's a speed vs accuracy slider, so you choose how thorough or quick the search is.

All the fiddly stuff (like how many notes to display, vector normalisation, etc.) sits neatly in a sidebar, keeping things simple and practical.
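The post doesn't say which clustering algorithm sits behind "you decide how many clusters you want". k-means is a common choice when the number of clusters is fixed up front, so here is a minimal sketch that assumes k-means. It uses deterministic farthest-point initialisation so that results are repeatable. The function names are illustrative and are not taken from the app.

```typescript
type Vec = number[];

// Squared Euclidean distance between two equal-length vectors.
function sqDist(a: Vec, b: Vec): number {
  let s = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    s += d * d;
  }
  return s;
}

// Deterministic farthest-point initialisation: start at the first point,
// then repeatedly pick the point farthest from every centroid chosen so far.
function initCentroids(points: Vec[], k: number): Vec[] {
  const centroids: Vec[] = [points[0].slice()];
  while (centroids.length < k) {
    let best = 0;
    let bestDist = -1;
    points.forEach((p, i) => {
      const d = Math.min(...centroids.map((c) => sqDist(p, c)));
      if (d > bestDist) {
        bestDist = d;
        best = i;
      }
    });
    centroids.push(points[best].slice());
  }
  return centroids;
}

// Plain Lloyd's k-means: returns a cluster index for each point.
function kMeans(points: Vec[], k: number, maxIter = 50): number[] {
  const centroids = initCentroids(points, k);
  let labels = new Array<number>(points.length).fill(-1);
  for (let iter = 0; iter < maxIter; iter++) {
    // Assignment step: each point joins its nearest centroid.
    const next = points.map((p) => {
      let bestK = 0;
      for (let c = 1; c < k; c++) {
        if (sqDist(p, centroids[c]) < sqDist(p, centroids[bestK])) bestK = c;
      }
      return bestK;
    });
    const changed = next.some((l, i) => l !== labels[i]);
    labels = next;
    if (!changed) break; // converged: no point switched cluster
    // Update step: move each centroid to the mean of its members.
    for (let c = 0; c < k; c++) {
      const members = points.filter((_, i) => labels[i] === c);
      if (members.length === 0) continue; // keep an empty cluster's old centroid
      centroids[c] = members[0].map(
        (_, d) => members.reduce((sum, m) => sum + m[d], 0) / members.length,
      );
    }
  }
  return labels;
}
```

You can run this on the full embeddings or on the 3D projection. Clustering in the full space keeps more information. Clustering the 3D coordinates makes the colours match what you see on screen more closely.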

Why this helps visual thinkers

Embeddings usually mean dealing with points in high-dimensional vector space (1536 dimensions in this case). Unless you're Stephen Hawking, that's just abstract regret waiting to happen. So the app squishes those dimensions down to three, and the structure becomes visible:

Portrait shots cluster neatly together, Brighton night photos group elsewhere, and my Ilford HP5 notes naturally huddle with other black-and-white 400-speed film.
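To make "squishing down to three dimensions" concrete, here is a minimal PCA sketch. It uses power iteration with deflation and never builds the full 1536×1536 covariance matrix. This is not the app's code. The return shape and the reading of "coverage" as the fraction of total variance kept by the three components are assumptions.

```typescript
type Vec = number[];

function dot(a: Vec, b: Vec): number {
  let s = 0;
  for (let i = 0; i < a.length; i++) s += a[i] * b[i];
  return s;
}

function normalise(v: Vec): Vec {
  const n = Math.sqrt(dot(v, v));
  return n === 0 ? v : v.map((x) => x / n);
}

// Multiply the (implicit) covariance matrix by v without building it:
// C v = X^T (X v) / (n - 1), where X holds the mean-centred rows.
function covTimes(X: Vec[], v: Vec): Vec {
  const out = new Array<number>(v.length).fill(0);
  for (const row of X) {
    const s = dot(row, v);
    for (let j = 0; j < row.length; j++) out[j] += s * row[j];
  }
  return out.map((x) => x / (X.length - 1));
}

// Project vectors onto their top `dims` principal components.
function pca(data: Vec[], dims = 3, iters = 200) {
  const d = data[0].length;
  const mean = new Array<number>(d).fill(0);
  for (const row of data) row.forEach((x, j) => (mean[j] += x / data.length));
  const X = data.map((row) => row.map((x, j) => x - mean[j]));

  // Total variance is the trace of the covariance matrix.
  const totalVar = X.reduce((s, row) => s + dot(row, row), 0) / (X.length - 1);

  const components: Vec[] = [];
  const eigenvalues: number[] = [];
  for (let c = 0; c < dims; c++) {
    // Deterministic start vector; power iteration pulls it towards the top eigenvector.
    let v = normalise(Array.from({ length: d }, (_, j) => 1 + j));
    for (let it = 0; it < iters; it++) {
      let w = covTimes(X, v);
      // Deflation: remove directions already captured by earlier components.
      for (const prev of components) {
        const p = dot(w, prev);
        w = w.map((x, j) => x - p * prev[j]);
      }
      v = normalise(w);
    }
    components.push(v);
    eigenvalues.push(dot(v, covTimes(X, v))); // variance along this component
  }
  const coords = X.map((row) => components.map((comp) => dot(row, comp)));
  // Assumed meaning of "coverage": share of total variance the projection keeps.
  const coverage = eigenvalues.reduce((a, b) => a + b, 0) / totalVar;
  return { coords, coverage };
}
```

A low coverage figure is a reminder that points which look close in 3D may not be close in the original space.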

Searching "Ilford" lights up a small neighbourhood, visually highlighting both perfect matches and near-misses. It feels real.
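Under the hood, "lighting up a neighbourhood" is a nearest-neighbour search. The following brute-force sketch scores every note by cosine similarity to the query embedding and keeps the top k. Those top-k notes are the ones that get amber lines. The `Note` shape is hypothetical. pgvector's `<=>` operator returns cosine distance, which equals 1 minus this similarity. The HNSW index approximates this exact search.

```typescript
interface Note {
  id: string;
  text: string;
  embedding: number[];
}

// Cosine similarity: 1 = same direction, 0 = unrelated, -1 = opposite.
function cosineSimilarity(a: number[], b: number[]): number {
  let dotAB = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dotAB += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  const denom = Math.sqrt(normA) * Math.sqrt(normB);
  return denom === 0 ? 0 : dotAB / denom; // guard against zero vectors
}

// Exact top-k search: score every note, sort best-first, keep k.
function topK(query: number[], notes: Note[], k: number) {
  return notes
    .map((note) => ({ note, similarity: cosineSimilarity(query, note.embedding) }))
    .sort((x, y) => y.similarity - x.similarity)
    .slice(0, k);
}
```

With a thousand notes, brute force is perfectly fast. An index starts to pay off as the collection grows.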

There's an intuitive power in being able to walk around your notes and ideas like this. It's not a hugely practical feature, but it does help bring the concept to life.

Under the bonnet

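Embeddings: OpenAI's text-embedding-3-large model turns text into 1536-dimensional vectors. The model's native size is 3072 dimensions, and the API's dimensions parameter can shorten its output to 1536.

Similarity search: Uses cosine distance in pgvector, sped up with HNSW indexing, with an adjustable trade-off between speed and accuracy.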

Dimensionality reduction: PCA squeezes vectors down to 3D. A “coverage” hint shows how accurately the projection represents your data.

Interface: A 3D scatter plot coloured by cluster, with a side panel showing top matches and their similarity scores.

API: Simple endpoints for ingesting notes, embedding text, and performing searches.
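The post doesn't say how the speed vs accuracy slider is wired up. One plausible mapping uses pgvector's `hnsw.ef_search` setting, which sizes the candidate list an HNSW scan explores. The default is 40, and higher values improve recall at the cost of speed. In the sketch below, the slider bounds, the `notes` table and the column names are all hypothetical.

```typescript
// Map a 0..1 "accuracy" slider to an hnsw.ef_search value.
// The min/max bounds are assumptions; pgvector's default is 40.
function efSearchFromSlider(accuracy: number, k: number, min = 20, max = 400): number {
  const t = Math.min(1, Math.max(0, accuracy)); // clamp slider input
  const ef = Math.round(min + t * (max - min));
  // Keep the candidate list at least as large as the number of results wanted.
  return Math.max(ef, k);
}

// Statements for one search. SET LOCAL only lasts until the transaction ends.
function searchStatements(accuracy: number, k: number): string[] {
  const ef = efSearchFromSlider(accuracy, k);
  return [
    "BEGIN",
    // SET cannot take bind parameters, so the computed integer is inlined.
    `SET LOCAL hnsw.ef_search = ${ef}`,
    // Hypothetical table/columns: $1 is the query embedding, $2 is k.
    "SELECT id, content, 1 - (embedding <=> $1) AS similarity FROM notes ORDER BY embedding <=> $1 LIMIT $2",
    "COMMIT",
  ];
}
```

Scoping the setting to a transaction means one user's "accurate" search doesn't slow down everyone else's queries on a shared connection.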

AI coding tools as a learning aid

Building with AI completely changes the way I learn. Working in Cursor feels collaborative and conversational, and it dramatically narrows the gap between theory and practical understanding. I can prototype quickly, see results, and genuinely understand complex concepts through active exploration.

A digital mind palace

I regularly use mind palaces to prep for talks, mapping ideas onto physical locations. This embedding map works on similar principles:

Rooms = clusters: One location for "400-speed black-and-white film," another for "night street photography with my Leica."

Doorways = connections: Amber lines show the links between ideas.

Anchors = dots: Dropping a query like "Ilford" is literally picking the starting point of your mental walk.

Flying around the 3D space for a few minutes plants everything spatially in your memory, far better than rote learning.

How to build one yourself

You don't need permission or a special qualification—just a free evening and a decent cuppa:

Gather 500–1000 short notes about something you know well—recipes, running logs, customer feedback, guitar pedals—whatever works.

Whip up a tiny Next.js app with pages to add notes and explore the 3D map.

Use Supabase + pgvector for storing and searching your embeddings.

Pick one consistent embedding model for notes and queries.

Project your embeddings into 3D with PCA, add a normalisation toggle and coverage hints.

Plot everything as a scatter plot, colour-coded by cluster, with a simple search box.

Ship quickly and iterate.

Got your notes in a CSV already? Batch upload them with a simple endpoint.
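A batch upload starts with turning a CSV into batches of note text. The sketch below assumes a header row with a `text` column and no line breaks inside quoted fields. Batching helps because OpenAI's embeddings endpoint accepts an array of inputs in one request. The function names and the batch size of 100 are assumptions. The upload endpoint itself is left out.

```typescript
// Split one CSV line into fields, honouring "quoted, fields" and "" escapes.
// Assumes no line breaks inside quoted fields.
function parseCsvLine(line: string): string[] {
  const fields: string[] = [];
  let field = "";
  let inQuotes = false;
  for (let i = 0; i < line.length; i++) {
    const ch = line[i];
    if (inQuotes) {
      if (ch === '"' && line[i + 1] === '"') {
        field += '"'; // escaped quote
        i++;
      } else if (ch === '"') {
        inQuotes = false; // closing quote
      } else {
        field += ch;
      }
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ",") {
      fields.push(field);
      field = "";
    } else {
      field += ch;
    }
  }
  fields.push(field);
  return fields;
}

// Pull one column out of a CSV with a header row, drop blank notes,
// and group the rest into batches for a bulk-ingest endpoint.
function csvToBatches(csv: string, column = "text", batchSize = 100): string[][] {
  const lines = csv.split(/\r?\n/).filter((l) => l.trim() !== "");
  const col = parseCsvLine(lines[0]).indexOf(column);
  if (col === -1) throw new Error(`No "${column}" column in CSV header`);
  const notes = lines
    .slice(1)
    .map((l) => (parseCsvLine(l)[col] ?? "").trim())
    .filter((t) => t.length > 0);
  const batches: string[][] = [];
  for (let i = 0; i < notes.length; i += batchSize) {
    batches.push(notes.slice(i, i + batchSize));
  }
  return batches;
}
```

For messier files with multi-line fields, a dedicated CSV parsing library is the safer choice.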

A few lessons learned

Visualising embeddings genuinely speeds up understanding.

Projections aren't perfect: trust general groupings more than precise distances.

Normalising vectors pre-PCA often gives clearer clusters.

Exposing the speed vs accuracy slider to users is genuinely useful.

Build first, intellectualise later.
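"Normalising" can mean two different things here. OpenAI embeddings are already returned at unit length, so L2 normalisation on its own changes nothing for them. Standardising each dimension to zero mean and unit variance does change what PCA sees, because no single high-variance dimension can dominate. This sketch assumes the standardisation reading and shows L2 normalisation alongside for comparison. Neither is confirmed as the app's method.

```typescript
// Standardise each dimension to zero mean and unit variance (z-scores).
// Dimensions with zero variance are only centred.
function standardise(data: number[][]): number[][] {
  const n = data.length;
  const d = data[0].length;
  const mean = new Array<number>(d).fill(0);
  const variance = new Array<number>(d).fill(0);
  for (const row of data) row.forEach((x, j) => (mean[j] += x / n));
  for (const row of data) row.forEach((x, j) => (variance[j] += (x - mean[j]) ** 2 / n));
  const std = variance.map(Math.sqrt);
  return data.map((row) =>
    row.map((x, j) => (std[j] > 0 ? (x - mean[j]) / std[j] : x - mean[j])),
  );
}

// L2 normalisation: scale a vector to unit length (a no-op for unit vectors).
function l2Normalise(v: number[]): number[] {
  const norm = Math.hypot(...v);
  return norm === 0 ? v : v.map((x) => x / norm);
}
```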

What next?

Experimenting with UMAP and t-SNE projections for different clustering vibes.

Adding queries that blend examples ("like these, but not those").

Labelling clusters with friendly LLM-generated summaries.

Swapping out my photo dataset for interview notes to dynamically guide conversations.
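For "like these, but not those" queries, one well-known approach is Rocchio-style vector arithmetic. It starts from the query embedding, pulls it towards the mean of the liked examples and pushes it away from the mean of the disliked ones. This is a sketch of that technique, not something the app does yet. The weights are arbitrary assumptions to tune by eye.

```typescript
// Rocchio-style blended query, re-normalised to unit length for cosine search.
function blendQuery(
  query: number[],
  like: number[][],
  unlike: number[][],
  alpha = 1, // weight on the original query
  beta = 0.75, // pull towards liked examples
  gamma = 0.25, // push away from disliked examples
): number[] {
  // Mean of a set of vectors (zero vector if the set is empty).
  const mean = (vs: number[][]) =>
    vs.length === 0
      ? new Array<number>(query.length).fill(0)
      : query.map((_, j) => vs.reduce((s, v) => s + v[j], 0) / vs.length);
  const pos = mean(like);
  const neg = mean(unlike);
  const blended = query.map((q, j) => alpha * q + beta * pos[j] - gamma * neg[j]);
  const norm = Math.hypot(...blended);
  return norm === 0 ? blended : blended.map((x) => x / norm);
}
```

The blended vector can then go straight into the same nearest-neighbour search as any other query.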

If you learn visually too, quit reading endless threads on embeddings. Just build your own 3D mind palace.